Adding Artificial Intelligence to the Team

The Origin of the Idea

The Problem

In this article, we will discuss how to access a large language model (LLM), like NIPRGPT, and share basic knowledge about using one, asking it the right questions, and how a problem-solving AI assistant can catalyze your team.

We did not initially think of using AI when faced with the problem of adjusting our ICSM. We have used AI before on our smartphones and for personal projects. We have heard predictions from senior leaders like Andrew Evans, the Director of the Army’s Intelligence, Surveillance, and Reconnaissance Task Force, who said, “We must learn to leverage AI to organize the world’s information, reduce manpower requirements, make it useful, and position our people for speed and accuracy and delivering information to the commander for decision dominance.”2 Still, in our work we never really saw a current, practical application for AI. The idea of asking a LLM to “generate an information collection plan” seemed far-fetched. We doubted it would produce anything coherent or usable. However, we were out of viable options when we ran into the ICSM problem.

Our unit, the 11th Airborne Division, is the Army’s newest division; consequently, we had a fraction of the manning of other Army divisions. At any given time, only three collection management Soldiers were working at our command post. We could not realistically collaborate and synchronize our efforts, whether internally with the team or externally with the rest of the staff, quickly enough to re-create and refine a quality product in the available time. The ICSM often incorporates over 670 data points, with tens of thousands of options for how and when to collect the information needed. Given the small staff and limited time available, the plan was sure to have inefficiencies and errors where we missed certain named areas of interest (NAIs), enemy formations, or targeting priorities requiring a collection focus. Although we applied an A-plus effort, by the end of our rushed edits, it felt like we were stuck with a C-minus product.

As we brainstormed, we found more issues. How could we ensure that our changes did not create redundant collection or gaps in coverage? How could we mix collection assets effectively without spending hours on manual adjustments? We knew that AI could provide some text-based solutions if we needed help writing Annex L (Information Collection), but the ICSM is a product that often needs to be communicated in a format best represented by a spreadsheet. LLMs can’t produce spreadsheets. We needed a solution that could manage the complexity of our data and the urgency of our situation.

The Solution

We started asking basic questions on commercially available Generative Pre-training Transformer (GPT) services using prompts like, “Can you make a schedule for three people who cannot be in the same place at the same time?” “Can you coordinate for each of those three people to visit ten different parks during a 24-hour period?” “Can you make sure that each of those people is at those parks for multiple hours?” And, finally, “Have the first person focus on parks 1 through 3.” We reasoned that this generic situation could represent the problems we faced with the ICSM’s development. Surprisingly, these prompts generated text-based answers that were very promising. We realized, though, that while a commercial GPT service could be helpful, its results were not useable. Since we were working with collection assets and operational planning, we needed to find a tool already familiar with Army doctrine and operations available on both controlled unclassified and classified systems.

We began researching Department of Defense LLMs that fit our requirements and identified several options. The most helpful and easiest to use on unclassified systems were NIPRGPT and CamoGPT3, but NIPRGPT, specifically, was more suited to our purpose and became our preferred app for testing the integration of AI into our team.

Through trial and error, we could make the LLM work for us rather than the other way around. Our desired end product was a copy-and-paste-worthy ICSM publishable as a division fighting product during a warfighter exercise. By using an AI assistant, we turned an error-prone process that cost us hours of time and included some emotional strain into a process that took minutes, had minimal errors, and allowed us to think about “big picture” problems instead of grinding out updated schedules for a dozen or more collection assets.

Ours was a niche problem set; however, the practical ways we applied AI may also apply to a variety of similar work issues. Accessing NIPRGPT is simple; after that, it is just a matter of asking the right questions.

Creating a NIPRGPT Account

Using your NIPR government email and user certification to authenticate your identity via the Department of Defense Global Directory, you can create a NIPRGPT account and access the platform. The NIPRGPT chat function, which provides the greatest familiarity to most users, allows users to engage in a conversation with the AI platform. The platform’s developed algorithm answers users’ questions based on a text database that is current as of December 2023. Responses to inquiries are “generated answers,” meaning that the platform creates new information from its database. The platform also has a “Workspace” function that enables users to conduct queries of text-based uploaded documents such as articles, doctrine, or white papers. Additionally, the platform offers multiple help options for users who are unfamiliar with AI applications.

Our team’s accounts were created within five minutes of applying, and we began testing the LLM. Our requests did not require approval by supervisors or other security managers—unlike many Army programs, access to NIPRGPT needed no other credentialing. Finally, unlike commercial LLM subscriptions, there is zero cost to the unit.

Asking the Right Questions

The turnaround time for producing an AI-assisted product depends on asking the right questions. As we experimented with our inputs, key phrases and words like “text-based representation” and “spreadsheet” helped the AI tool understand the baseline product we wanted to create. Specifying numbered rows and lettered columns also helped communicate adjustments to the product’s layout.

The AI tool excels in its ability to ingest rules and requirements and make on-the-spot adjustments. For example, if a user inputs a rule like, “no information collection asset can collect on an area for more than 2 hours,” the AI tool will immediately change pre-coordinated collection timelines to comply with the new conditions. Setting up your rules and requirements at the beginning of product creation shortens the refinement process while minimizing the chances of human error that could result in coverage gaps and redundant collection.

Unfortunately, the chat function cannot retain rules and conditions from previous conversations. This is a known issue that NIPRGPT creators are working to address. Until the issue is resolved, users must re-enter the rules and questions at the beginning of each new chat to return to the desired baseline product.

The chat is also very literal, sometimes requiring users to refine their questions or requests. For example, if a user asks the chat to make an Excel sheet or a spreadsheet, it will reply that it cannot do so—but it will give you step-by-step instructions on how to use Microsoft “Excel” to create a spreadsheet on your own. However, rewording the request to ask for a “text-based representation” of a spreadsheet will result in a spreadsheet that can be copied and pasted into an Excel workbook. Working through these minor issues is a simple learning process that is more than worth the minutes of effort.

After some trial and error with setting rules and asking the right questions, we had a working set of instructions on how to start a conversation with the LLM to produce the AI-adjusted products we needed within seconds. The LLM even produced a written summary of the adjustments we made to the instructions, which was a great help for understanding the fine-tuning process and rapidly created shared understanding across the collection management team on effectively using this tool.

A Practical Example

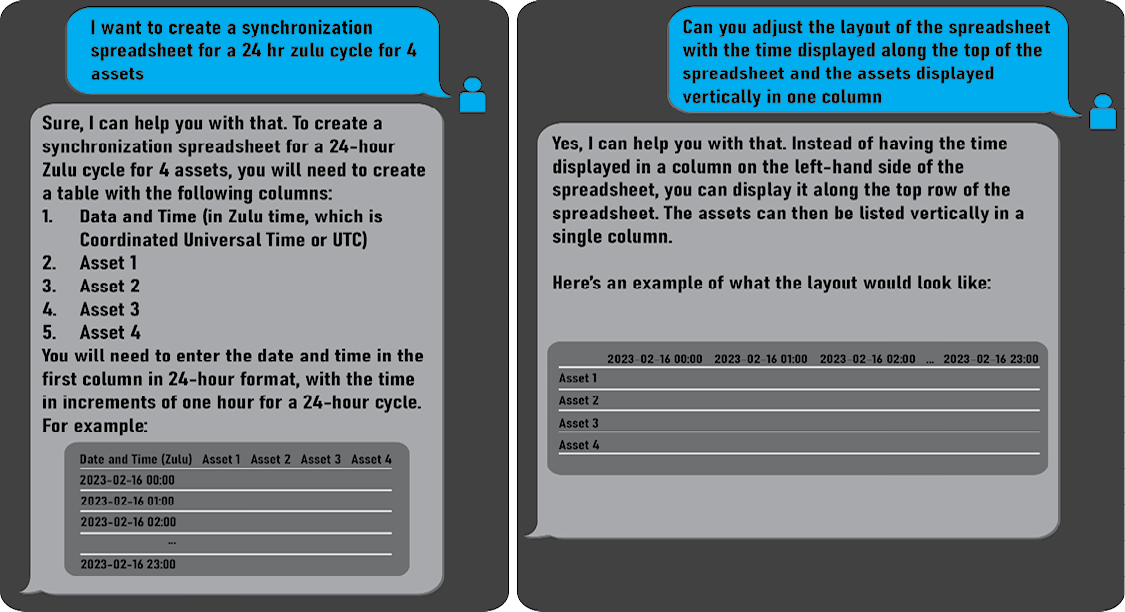

Building the rules and asking the right questions is an iterative process. In this example, we prompted NIPRGPT to help us refine our ICSM. We began by stating the product’s intent and providing some basic information. The initial interaction (Figure 1) was a request to build a synchronization spreadsheet for a 24-hour period with four assets.

Figure 2 reflects a request to adjust the spreadsheet’s layout to swap the information between the columns and rows, reassigning the time as column headers with the assets naming each row.

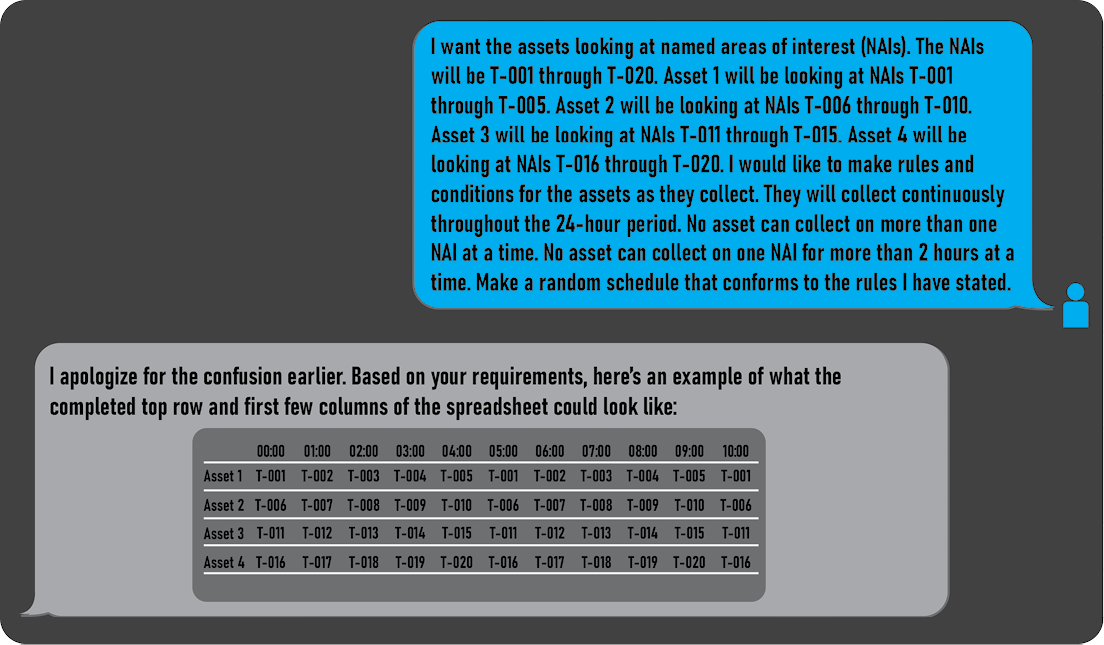

After establishing the product layout, we provided the NAIs that needed to be built into the collection plan (Figure 3). These were numbered T-001 through T-020. Each asset was assigned specific NAIs for collection. We placed rules and conditions on the assets’ collection scheme. The LLM then created a prioritized ICSM based on the information we provided.

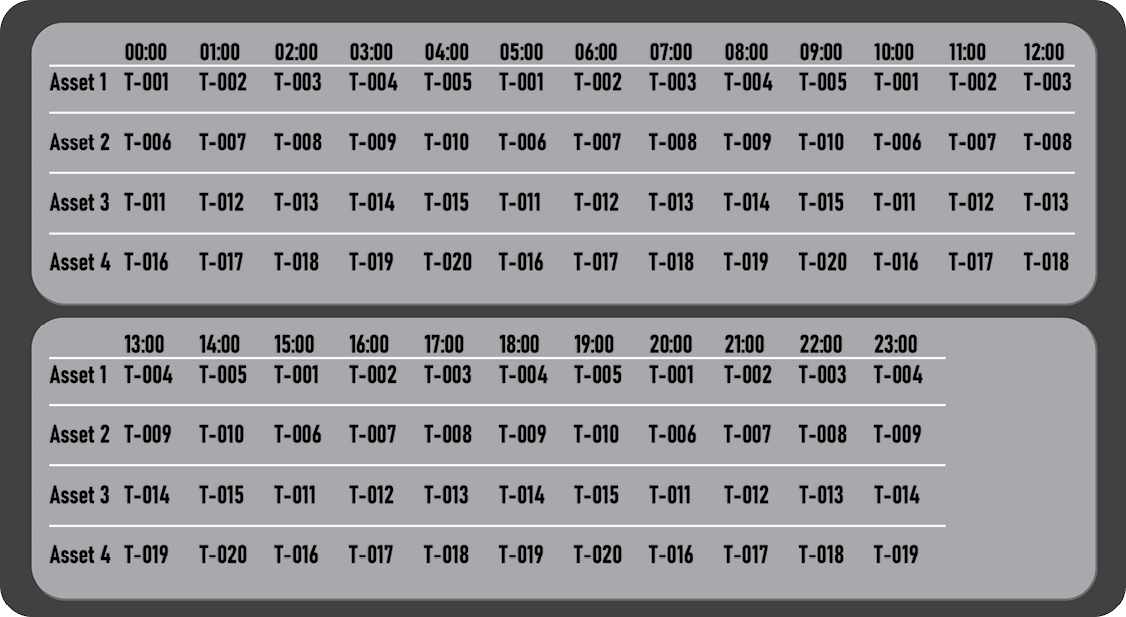

Once the ICSM was created, we set specific collection requirements. At that point, we could also request a summary of each asset and NAI by total collection time to provide a holistic understanding and assessment of the collection plan. Figure 4 illustrates this end product, which we copied and pasted into an Excel spreadsheet without adjustments, requiring minimal user labor.

Other Potential Uses for Large Language Models

As our team continues to grow in understanding of how LLMs work, we can recognize many other potential applications. Examples include brainstorming priority intelligence requirements (PIRs), providing generalized indicators of enemy intent for the information collection matrix, and assisting with generating Annex Ls that are easier to digest for our subordinate units.

LLMs can be helpful when writing PIRs for different division operations. Instead of asking, “Can you write PIRs for our division operation?” we begin by describing some of the operation’s mission variables—for example, “We are a division in the offense that is planning to use an air assault in a forested environment with rolling hills while facing a threat the size of a brigade that is set in an established defense. What are the recommended PIRs?” Typically, this will result in a list of some example PIRs with a doctrinal breakdown by mission variables:

- Enemy.

- Determine the location, range, and effectiveness of the air defense.

- Locate and assess vulnerabilities of the threat’s command and control.

- Determine where the threat’s reserve is and how it will be committed.

- Terrain.

- Determine the weather patterns that will affect air assault operations.

- Locate key terrain for landing areas around the objectives.

- Time.

- Determine key moments of vulnerability in the threat’s air defense, such as maintenance times or cloud cover, for a defense that isn’t radar-assisted.

- Civil Considerations.

- Determine how civilians will interfere with movement or how they will attempt to leave the conflict area.

Although the PIRs are broad and require additional work to tailor them before publishing, they are an excellent starting point. The LLM allows users to rapidly structure their own questions and form the recommended PIRs for the division commander.

When humans create an information collection matrix, they often run out of ideas or fail to consider all warfighting functions when assessing indicators of enemy intent. LLMs can provide valuable assistance in thinking through different factors, and they can offer example indicators that we can sort through for our specific operation. For example, consider the following LLM query: “What are some indicators of a threat rotary wing attack battalion planning a long-range assault into an American division’s area of operations? Account for American tactical air defense and threat strategic enablers.” The LLM will produce a list of indicators that includes increased reconnaissance activity, forward deployment of forces, increased logistical support, preparations for suppressing enemy air defenses, enhanced communications, electronic warfare and cyber operations, use of strategic assets, pre-assault reconnaissance, simulation and training, and civilian information operations.

These are only a few examples of AI’s potential applications on the battlefield. Our only limits are our creativity and willingness to experiment with finding the right questions to ask.

Not the Tool for Every Task

While an LLM can help make tasks more efficient, it is not a suitable tool for every task. It is important to understand the limitations and weaknesses of LLMs in the field. For example, an LLM is a poor tool choice when sourcing direct quotes or gathering specifications on equipment, and although it is a powerful assistant it cannot do our jobs for us.

LLMs are not designed to pull direct quotes from doctrine or other published material. The NIPRGPT model is not intended to reference specific sources or documents directly; instead, it generates responses based on a broad survey of resources. This means that the LLM generates a response that a source could say or extrapolates what that source would say rather than directly referencing what that source did say. First Lieutenant Nicholas Brooks, one of the designers of NIPRGPT, recommends finding direct quotes using internet search functions. The NIPRGPT model is not connected to current internet content, so it may not reflect the exact wording or context of a specific quote or doctrinal reference.4

Likewise, LLMs are not well-suited for gathering equipment capabilities. The models’ responses are based on a wide range of sources and may not always reflect the most accurate or up-to-date information. For this type of information, it is always best to refer to official documentation, internal running estimates, and technical manuals. Once that information is in hand, it can be included in the LLM rules. This will result in more accurate assessments when the model is asked to help with understanding the best uses for specific capabilities.

AI can be a valuable teammate when generating ideas or providing information, but it cannot replace thorough planning or team collaboration. In his October 2024 appearance on The Convergence Podcast, Lieutenant Colonel Blaire Wilcox noted that “[AI] makes professionals better. It doesn’t necessarily make amateurs or the inexperienced [into] professionals.”5 There are no shortcuts to good professional military staff work—but there are catalysts. While AI models cannot understand the nuances of a specific situation or develop a plan independently, they can help generate ideas and prevent the kind of human errors that can be created when processing substantial amounts of data, as was our situation with the ICSM.

By treating AI like any Soldier, we can trust it to provide the best information it has. As with any team member, though, it is important to conduct regular inspections and reviews to ensure that the information it provides is accurate and relevant while continuing to coach it to improve its performance continuously.

Conclusion

Integrating AI into staff processes, specifically a LLM like NIPRGPT, has proven to be a valuable tool for streamlining tasks and providing a new level of analysis in the 11th Airborne Division. We used it to adjust our ICSM quickly and continue to find other uses for it as we develop our standard operating procedures. The practical applications across all staff processes in a G-2 section, the staff sections of the other warfighting functions, and beyond into other echelons of command are limitless.

We cannot allow ourselves to perceive AI as a tool that needs to be perfect and provide independent answers without human input and analysis. It must be employed practically. As our experience demonstrates, the practical application of AI has the potential to improve the quality and efficiency of any team’s performance. How can you add AI to your team?

Endnotes

1. Mikayla Easley, “Army Implements Generative AI Platform to cArmy Cloud Environment,” DefenseScoop, September 10, 2024, https://defensescoop.com/2024/09/10/army-generative-ai-capability-carmy-cloud/.

2. Mark Pomerleau, “Army’s ISR Task Force Looking to Apply AI to Intel Data Sets,” DefenseScoop, October 17, 2024, https://defensescoop.com/2024/10/17/army-isr-task-force-apply-ai-intel-data-sets/.

3. Lori McFate, “Operationalizing Science at JMC with Artificial Intelligence and Machine Learning,” U.S. Army Worldwide News , October 29, 2024, https://www.army.mil/article/280941/operationalizing_science_at_jmc_with_artificial_intelligence_and_machine_learning/.

4. First Lieutenant Nicholas Brooks, telephone discussion with authors, n.d.

5. Luke Shabro and Matt Santaspirt, “107: Hybrid Intelligence: Sustaining Adversary Overmatch with Dr. Billy Barry, LTC Blair Wilcox, & TIM,” October 25, 2024, in The Convergence, produced by The Army Mad Scientists, podcast, 58:52, https://theconvergence.castos.com/episodes/107-hybrid-intelligence-sustaining-adversary-overmatch-with-dr-billy-barry-ltc-blair-wilcox-tim.

MAJ Wesley Wood currently serves as the collection manager for the 11th Airborne Division. He previously served as the Deputy G-2 for the 11th Airborne Division, as the Airborne Task Force Observer, Controller, Trainer at the National Training Center, and as the Commander, Military Intelligence Company, 1st Stryker Brigade Combat Team, 25th Infantry Division, Fort Wainwright, AK.

SGT Derrion Robinson currently serves as a collection management noncommissioned officer for the 11th Airborne Division. He previously served as a fusion analyst in the 4th Infantry Division G-2, as a security manager in the 4th Infantry Division G-2, and as a S-2 analyst at the 1st Battalion, 41st Infantry Regiment, Fort Carson, CO.